MADRID, June 19 (Portaltic/EP) –

Meta's Fundamental AI Research (FAIR) team has announced new research models and tools for generating music and images and for detecting AI-generated speech through watermarking, which it is making available to the community under an open approach.

Meta has shared its latest models, developed under an open science approach, which include “image-to-text and text-to-music generation models, a multi-token prediction model, and a technique for detecting AI-generated speech,” as highlighted in a post on its official blog.

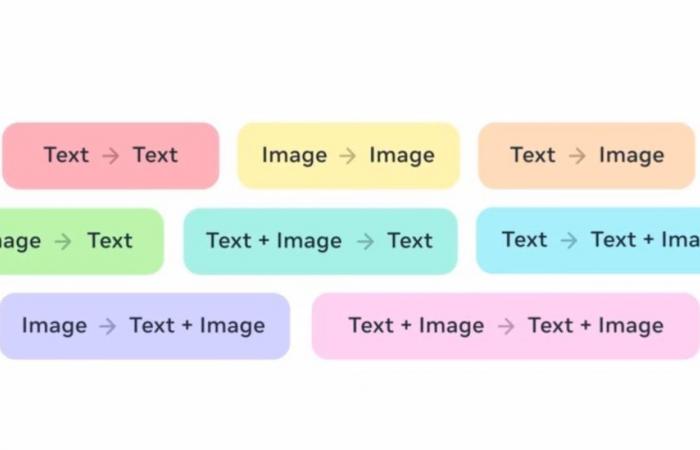

Chameleon, introduced in May, is a family of models that accepts and produces text, images, or a combination of both, using a unified architecture for encoding and decoding.

FAIR has now announced the availability of Chameleon 7B (7 billion parameters) and 34B (34 billion parameters) under a research-only license. It has, however, chosen not to release the family's image generation model.

FAIR has also released a set of models based on a multi-token prediction approach for more efficient training of language models, with the ability to predict multiple future words at once instead of one at a time.

A third set of models is grouped under the acronym JASCO, which stands for ‘Joint Audio and Symbolic Conditioning for Temporally Controlled Text-to-Music Generation.’ With it, the company offers a tool for generating music from text that accepts several conditioning inputs, such as specific chords or rhythms, for improved control over the result.

These models are accompanied by AudioSeal, a watermarking technique designed specifically for audio that detects AI-generated speech, even when it appears only in segments of a longer audio file. Meta has released it under a commercial license.

Lastly, to help mitigate geographic biases in text-to-image models, Meta has shared tools designed to help measure flaws in its models, including automatic ‘DIG In’ indicators that assess potential geographic disparities.